Evidence-based care had its early political beginnings with the first appeals by Archibald Cochrane1 in 1972 to require scientific justifications as part of the decision-making process. Cochrane is often credited with being the “father” of modern evidence-based medicine. Until that time, most medical (and dental) practice was based on tradition or clinical preference rather than analysis of the information in the published literature and consideration of patient factors. Table 1 provides a list of useful resources.

Evidence-based medicine (EBM) or dentistry (EBD) is a combination of three key criteria that are the foundation for decision-making: 1) best evidence; 2) clinical expertise; and 3) patient values. This practice triad is logical but often misinterpreted. Clinical expertise includes consideration of an individual’s clinical talents and personal experiences. Patient values take into account the cultural, socioeconomic, and other special patient values influencing choices. Best evidence for EBD is filtered from all the available evidence about the clinical question of interest. All three criteria are of equal importance. Several common misconceptions arise. First is that EBD is only about evidence, when, in fact, it involves all three parts of the triad. Second is the notion that identifying a publication or source of agreement for a given decision is sufficient evidence; however, evidence is based on examining all available information in an unbiased and systematic way.2 Third is that evidence is truth. It is not; evidence is the collection of scientific information that is available at a specific time, but it is typically incomplete and can be misleading.

While different definitions may be cited for EBM or EBD, the traditional one is “…the conscientious, explicit and judicious use of current best evidence in making decisions about the care of individual patients…”.3 Notice again that the focus tends to be on the evidence portion of the triad, yet all three components are equally important.

Determining how much evidence actually exists has long been difficult. It is generally accepted that very little evidence exists. An anecdotal estimate is that evidence supports only about 8% to 10% of what occurs in general dental practice.4,5 This is partly because good evidence usually is associated with a well-conducted clinical trial that has been extensively peer-reviewed and published in a reputable journal. Clinical trials are expensive, not often done, and may only cover a few of the variables of interest for an issue. They also require several years to produce an answer, and even more years for that information to reach the public domain, thereby creating a significant time lag. Thus, it is estimated that under optimal circumstances, about 50% of the evidence may be available, while the rest is in some earlier stage of investigation and discovery. In other words, only about 10% of the complete evidence is available today, whereas about 50% is needed, and closing that gap depends on conducting the necessary clinical trials. Therefore, 10 to 15 years or more may pass before a practical evidence base is available.

This process has only been initiated in recent years partly because the necessary technologies are just now becoming widely available (Figure 1). Also, there are many tedious steps involved in collecting an answer that are not yet standardized. Collecting evidence is remarkably similar in stages (Figure 1) to all research operations of writing protocols for grants or writing scientific publications—it is “the scientific method.”6 The path includes: 1) asking a clinical question; 2) collecting knowledge in the published literature; 3) assessing the quantity and quality of that information; 4) weighting and/or aggregating the information; and 5) creating a conclusion or answer. Once the answer is available, it can be published in one form or another and used to create new published literature. Often these answers are reported as critical reviews or critical appraisals and collected into evidence libraries. The answers, whether involving small questions or large ones, may not be satisfying if little published information exists. It is much more common for them to be included as part of a summary mentioning that more information or investigation should be done to provide a complete answer.

There are many challenges along the path to producing an answer, including writing a clear, well-defined clinical question. The PICO approach can ensure that this happens.7 It involves breaking apart the clinical question into four parts: P = patients, population, or problem of interest; I = intervention, treatment, or other option for care; C = control or comparison; O = outcome of interest. In many cases, there is also concern for the time period of measurement and a T may be added to the acronym (PICOT).8 Once the question is well formed, a search is conducted in available biomedical databases (eg, PubMed, Web of Knowledge [Table 1]) to identify information. Quantity and quality considerations are essential. Defining the quality can be quite involved, and currently there is no widely accepted and standardized approach for this. If there were, EBD might become a fully automated process. Meta-analyses and other tools for combining information are helpful as well, but, again, they are not uniform. Locating information to be considered as evidence can now be done far more quickly than two decades ago. Today, with high-speed networks, digital libraries, and open access, this process can occur in seconds. There are applications (APPs) that facilitate this (Table 1).
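As an illustration only, the PICO breakdown can be sketched as a small data structure that turns a clinical question into a generic Boolean search string. The class name `PicoQuestion`, the method `to_query`, and the example terms are hypothetical and are not taken from any of the tools listed in Table 1. The query uses plain AND/OR grouping rather than the syntax of any particular database.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PicoQuestion:
    """Hypothetical container for the four PICO parts plus the optional T."""
    population: List[str]
    intervention: List[str]
    comparison: List[str]
    outcome: List[str]
    time_frame: Optional[str] = None  # the optional T in PICOT, kept as a note

    def to_query(self) -> str:
        """Join synonyms with OR inside each part, and join the parts with AND."""
        parts = [self.population, self.intervention, self.comparison, self.outcome]
        groups = []
        for terms in parts:
            if terms:  # skip any part that was left empty
                quoted = " OR ".join(f'"{term}"' for term in terms)
                groups.append(f"({quoted})")
        return " AND ".join(groups)


# Illustrative question; the terms are assumptions chosen for the example.
question = PicoQuestion(
    population=["primary molars"],
    intervention=["resin composite"],
    comparison=["amalgam"],
    outcome=["restoration failure", "longevity"],
    time_frame="5 years",
)
print(question.to_query())
```

Keeping each part as a list of synonyms is a design choice: it makes a narrow question easy to broaden without rewriting the whole search.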

Evidence appears in many forms. EBM and EBD tend to focus on clinical trials. There is a great variety of evidence to consider, all of which is valuable depending on the particular stage of evidence collection. New procedures or products often precede the clinical trial evidence desired. Early information may have little peer review, even if it is of good quality. After 1 to 2 years, more information typically becomes available as early non-clinical reports are published and peer groups develop Delphi assessments. After 5 to 10 years, the clinical data of interest finally begins to emerge. Different clinical trial types or efforts may assess different patient variables, so the information may continue to accumulate for several years. Figure 2 is an evidence map meant to cover all of these situations. At the top right-hand corner is the best possible evidence—that is, evidence that is highly peer-reviewed, good quality, and includes critical appraisals and meta-analyses. Other similar explanations of quality or confidence in evidence use a linear scale or pyramid and deal only with the methods found in the top right-hand corner of the map. It should be noted, however, that all evidence on the map might have some value at some point.

Clinical Trial Types

Clinical information is collected in a variety of ways,9 and those differences impact the value of the information. Different biases may be included. Different types of calculations may not be possible. Three major parameters of clinical trial designs involve the “timing,” the “sampling method” of the population, and the nature of the “outcomes” being assessed. A quick summary of all types is presented in Figure 3. A more expansive treatise on types is presented by Spilker.10 A trial may be designed as a longitudinal prospective one (to project into the future), a cross-sectional one, or a longitudinal retrospective one. Longitudinal prospective trials allow for careful planning of all the factors to be controlled and the information to be collected. Retrospective ones are limited to what might have been previously planned in a trial. A cross-sectional trial audits the status or current outcome of patients already treated and generally does not include much information about the variables associated with that treatment. Cross-sectional studies are often different from others, due to the limited qualification of the information being collected. In testing the longevity of dental materials, a cross-sectional trial will often report less than half the value of that of prospective longitudinal trials.11

Longitudinal prospective trials may be carefully designed with controls and measures of many key factors, with randomization for patient selection and treatment; these are randomized controlled trials (RCTs). Less-well-controlled trials may simply be referenced as controlled clinical trials, or CCTs. In dental materials testing, in lieu of controls, the assessment system relies on calibrated evaluators12,13 and published standards for assessment. Such trials are distinguished as standards-based clinical trials, or SCTs.14 If patients are selected for inclusion on the basis of having a condition or risk, the trial is a cohort study. If the study is focused on patients who have already displayed a particular outcome of interest, the study is a case-control study. Some combinations of these qualifiers also exist.

Restorative dental materials present some distinctive challenges for clinical testing. A number of additional factors15 beyond the treatment or choice of materials affect outcomes. These are characterized as: 1) operator factors; 2) design or preparation factors; 3) materials factors; 4) intraoral location factors; and 5) patient factors. Experience strongly suggests that operator factors dominate and may represent more than half the risk affecting the outcomes measured.

Two options exist for data collection. The first is to employ only highly trained and calibrated clinicians following specific rules.16,17 Historically, this has been the case for trials managed in clinical research units (CRUs) of universities or institutions. The alternative is to ignore those operator factors and let them affect the results in order to observe outcomes that reflect typical practices. Trials managed this way involve a large number of practices contributing information and are called Practice Based Research Networks (PBRNs). Both types have strengths, but information from PBRNs is more limited in interpretation because the variables are not as well controlled.

Results from CRUs and PBRNs can be quite different and often conflicting. For example, PBRNs allow for restoration replacement when clinically anticipated, not necessarily because of real failure, while CRUs measure performance to the point of failure. Therefore, longevity based on PBRN performance is typically calculated to be only half that of CRUs. Additionally, PBRNs report clinical assessments of secondary caries based on uncalibrated or unconfirmed clinical judgments, which are often skewed by misinterpretation of margin performance, and they report secondary caries as the major reason for restoration failure. In contrast, CRUs report secondary caries as almost non-existent,18-21 and they report restoration fracture as the major failure mode. It must be noted that CRUs usually actively manage caries risk, use inclusion criteria that exclude high-risk patients, and are much more discriminating about what changes in restoration margins connote.

Dental materials research, especially longitudinal prospective clinical trials of materials, historically has not been supported by major sources of research funding such as National Institutes of Health (NIH) until recently (2005), and then only as PBRNs. CRU trials have been supported by dental companies as short-term—ie, 1 to 3 years—efforts. Thus, the base of information for evidence-based dentistry is rather small. This substantially limits the basis for making evidence-based decisions and also prevents efforts, such as correlating laboratory research tests and clinical outcomes, to foreshorten the research and development of new materials.

Aggregation of Clinical Evidence

Rarely are clinical trials large and complete enough in design that they can stand alone in answering a question. A number of variables, such as patient age, gender, risk factors, pre-existing conditions, socioeconomic factors, and trial design biases, are confounders. These challenges can often be managed when there are several good trials in existence by combining the information from the trials. There are statistical approaches such as meta-analyses22 to weigh the quality of the trials, potentially combine the data, and forge a greater understanding of the true answer for a clinical question. An early example of applying this process to posterior composite wear results was reported by Taylor et al.23 If the data cannot be combined, it is possible to systematically review24 and aggregate results in terms of relationships or risks (forest plots) to estimate the true answer. Therefore, critical appraisals or meta-analyses are often described as the “gold standard” of clinical evidence.
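To make the idea of combining trials concrete, here is a minimal sketch of one common pooling technique, the fixed-effect inverse-variance method. It is not necessarily the method used in the studies cited above. The function name `pool_fixed_effect` and the wear values are hypothetical, and the sketch assumes each trial reports an effect estimate with its standard error.

```python
import math
from typing import List, Tuple


def pool_fixed_effect(estimates: List[float],
                      std_errors: List[float]) -> Tuple[float, float]:
    """Pool trial estimates, weighting each by the inverse of its variance."""
    if not estimates or len(estimates) != len(std_errors):
        raise ValueError("need one standard error per estimate")
    # More precise trials (smaller standard error) receive larger weights.
    weights = [1.0 / (se ** 2) for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical wear results from three trials, for illustration only.
wear_estimates = [0.10, 0.14, 0.12]
wear_std_errors = [0.02, 0.04, 0.02]
pooled, pooled_se = pool_fixed_effect(wear_estimates, wear_std_errors)
# Approximate 95% confidence interval under a normal assumption.
low, high = pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se
print(f"pooled = {pooled:.3f}, 95% CI = ({low:.3f}, {high:.3f})")
```

A fixed-effect model assumes that all trials estimate the same underlying value. When trials differ in operators, patients, or design, a random-effects model is usually considered instead.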

Anticipating the need to combine clinical trials, a set of rules has been developed over the past decade or so to provide more uniform data documentation and reporting. The first rule is that to publish a clinical trial, it must be preregistered. Different registration options are possible (Table 1 list of Clinical Trials Registries), but all are public, describe the goals, report the clinical design, indicate the data types collected, and can include information about the management and funding. While somewhat controversial, this rule helps to ensure that the original design of the clinical trial is known. Publication of clinical trial results requires that complete characterization of the design be included. Guidelines in this regard are collected as CONsolidated Standards Of Reporting Trials (CONSORT [Table 1]). Guidelines for aggregating or meta-analyses are published as Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA [Table 1]). A good example of a clinical trial that meets the standard was published recently by Cunha-Cruz.25

While it stands to reason that everyone would like to be able to combine their information with sufficient good clinical trials using these methods, this is rarely possible. It is also the underlying reason why evidence-based dentistry has been characterized as involving only 8% to 10% evidence.

Libraries of EBD Information

To collect the evidence base, a number of approaches have been used during the past 20 years. Of these, the foremost and most formal one is the Cochrane Collaboration, which was created in 1992 and demands good-quality clinical trial information. Systematic reviews in the Cochrane Collaboration are produced by individuals specially trained to adhere to the formal analytic procedures that: 1) define a specific clinical question; 2) perform a comprehensive review to identify all possible literature; 3) follow a peer-reviewed protocol; 4) select studies for inclusion; 5) assess quality of each study; 6) synthesize the findings in an unbiased manner; and 7) interpret the findings in a balanced and impartial summary. Almost invariably, needed RCTs, CCTs, or SCTs are non-existent at present and the conclusions of efforts are either that there is no clinical evidence or its quality is poor. As of October 2013, the Cochrane Collaboration included 152 reports for dentistry, which primarily focused on prevention and dental sealants. Still others have collected the available evidence, rated its quality, and tried to force a limited answer to a clinical question. The American Dental Association (ADA) in the past few years has made a major commitment to collecting evidence and training practitioners (Table 1). The ADA EBD Library currently has collected more than 2,300 references (E. Vassilos, Manager, ADA Center for Evidence-Based Dentistry, personal communication, October 14, 2013) that are clinical trials, meta-analyses, critical appraisals, or references to Cochrane Collaboration reports. The dental school at the University of Texas Health Science Center at San Antonio (UTHSCSA) has approached the problem more practically,26 identifying relatively limited clinical questions, collecting two to five readily available reports, evaluating and rating the quality of those reports, and providing one-page summaries with potential conclusions. These are called “critically appraised topics” (CATs)27 and depend on trained faculty and students to generate them (Table 1).

One of the major benefits of each of these libraries is that they form a new database that can be searched quickly for clinical answers. Rather than repeating the entire EBD process outlined in Figure 1, EBD libraries can be searched, making it possible to receive the answers in seconds. At the University of Michigan, a web-based APP (Table 1) has been developed to provide quick access for PICO searches of the literature or EBD libraries to discover answers to clinical questions. More of these types of portals are anticipated in the very near future.

As with any effort to examine the literature, the evidence is usually not final, but is continuing to develop. Appraisals must be routinely revisited; while there is no formal requirement, the recommendation is often that appraisals be redone every 2 to 5 years. It is important to reiterate that evidence is not truth; it is simply the collection of scientific information to date.

Foundation for Dental Materials

For dental materials, the clinical research effort has been limited, representing only about 10% of all research for many years.5,28 Clinical trials require much more time and expense than laboratory investigations of properties or clinical simulations. Only a few laboratory investigations have shown correlations with actual clinical results.14 This occurs for a variety of reasons. Individual laboratory properties (such as flexural strength) do not line up well with the performance data measured clinically for restoration surfaces and margins. Simulations are attempts to bridge the gap, but they are generally too limited in design, not validated, use water to mimic saliva, and are not conducted long enough to represent reasonable clinical lifetimes. As indicated in Table 2,11 a large range of laboratory properties could be tested. However, researchers face significant challenges in detecting meaningful correlations (C) between laboratory tests and simulations (small circles in Figure 4) and clinical results or combinations of clinical data (small boxes in Figure 4). Relationships (R) may occur with laboratory or clinical results that do not produce correlations. Figure 4 also shows types of factors that typically confound data for the lab and clinic.

Table 2 provides examples of the wide range of laboratory tests performed on dental materials for physical, chemical, mechanical, and biological properties, as well as clinical manipulation and clinical performance properties. Typically, only a couple are tested, and the results do not correlate with clinical properties. Table 2 also summarizes the limited range of clinical categories evaluated. The original categories were published by Cvar and Ryge12 while working for the United States Public Health Service (USPHS). They are: color match, marginal discoloration, secondary caries, anatomic form, marginal adaptation, and surface texture. The original list has been expanded to include more information, such as: proximal contact, functional occlusion, axial contour, pre- and postoperative sensitivity, restoration retention, and resistance to fracture. Most of these categories concern clinical observations of restoration margins or surfaces, and a rating system (A – Alfa = clinically “ideal”; B – Bravo = clinically “acceptable”; C – Charlie = clinically “unacceptable”) is used to determine clinical steps from ideal to failure.
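As a rough illustration of how Alfa, Bravo, and Charlie ratings might be tallied for one category at a recall visit, consider the sketch below. The names `Rating` and `rating_shares` and the sample ratings are hypothetical. The sketch does not reproduce the published USPHS criteria; it only shows the three-level scale being summarized.

```python
from collections import Counter
from enum import Enum
from typing import Dict, List


class Rating(Enum):
    """Three-level scale as described in the text."""
    ALFA = "clinically ideal"
    BRAVO = "clinically acceptable"
    CHARLIE = "clinically unacceptable"


def rating_shares(ratings: List[Rating]) -> Dict[str, float]:
    """Return the fraction of restorations at each rating level."""
    if not ratings:
        return {level.name: 0.0 for level in Rating}
    counts = Counter(ratings)  # missing levels count as zero
    return {level.name: counts[level] / len(ratings) for level in Rating}


# Hypothetical marginal adaptation ratings for four restorations at one recall.
recall = [Rating.ALFA, Rating.ALFA, Rating.BRAVO, Rating.CHARLIE]
print(rating_shares(recall))
```

Tracking how these fractions shift from Alfa toward Charlie across recalls is one simple way to picture the progression from ideal to failure.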

Almost all dental materials trials are short-term (1 to 5 years) and involve low-risk participants. Generally, about 20% of the population is considered at high risk for dental caries. Because of pre-existing restorations, lost teeth, or existing dental caries, that high-risk group is primarily excluded from trials. As a result of these two practices combined, reports of dental restorative materials’ clinical performance are generally very positive. Understanding potential differences or problems, however, requires that failures and their patterns be observed. These are missing from most dental materials clinical trials.

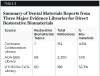

Finally, the prevalence of clinical research reports from the different EBD library databases must be considered. As shown in Table 3, which provides a summary of dental materials reports for three major evidence libraries for direct restorative biomaterials, many of these reports conclude that there was little or no sufficient or good evidence available at the time of the report. The number of reports involving dental materials that appeared in October 2013 in the Cochrane Collaboration, ADA EBD Library, and UTHSCSA CATs library were 12, 89, and 69, respectively. For the first two, fewer than 5% of the libraries discovered information. For the last one, the inclusion of laboratory research in the process elevates it to 32%. It is clear that very little information exists. Compiling the evidence needed to answer 50% of the important questions will still require 15 to 20 more years and much more concerted efforts.

Summary

Evidence for restorative dental materials performance is very limited due to the scarcity of clinical trials. A much greater effort to conduct clinical research over the next 15 to 20 years is required to build the needed evidence base. Fast access to the available known information is already possible.

ABOUT THE AUTHORS

Stephen C. Bayne, MS, PhD

Professor and Chair, Cariology, Restorative Sciences, and Endodontics, School of Dentistry, University of Michigan, Ann Arbor, Michigan

Mark Fitzgerald, DDS, MS

Associate Professor and Vice Chair, Cariology, Restorative Sciences, and Endodontics, School of Dentistry, University of Michigan, Ann Arbor, Michigan

Queries to the author regarding this course may be submitted to authorqueries@aegiscomm.com.

REFERENCES

1. Cochrane AL. Effectiveness and Efficiency. Random Reflections on Health Services. London, England: The Nuffield Provincial Hospitals Trust; 1972.

2. Ismail AI, Bader JD. Evidence-based dentistry in clinical practice. J Am Dent Assoc. 2004;135(1):78-83.

3. Sackett DL, Richardson WS, Rosenberg W, Haynes RB. Evidence-based Medicine: How to Practice and Teach EBM. New York, NY: Churchill Livingstone; 1997.

4. Niederman R, Badovinac R. Tradition-based dental care and evidence-based dental care. J Dent Res. 1999;78(7):1288-1291.

5. Bayne SC, Marshall SJ. 20-year analysis of basic vs applied DMG-IADR research abstracts [abstract]. J Dent Res. 1999;78:130. Abstract 196.

6. Duke ES. The Changing Practice of Restorative Dentistry. Indianapolis, IN: Indiana University School of Dentistry; 2000.

7. Richardson WS, Wilson MC, Nishikawa J, Hayward RS. The well-built clinical question: a key to evidence-based decisions. ACP J Club. 1995;123(3):A12-A13.

8. Toh SL, Messer LB. Evidence-based assessment of tooth-colored restorations in proximal lesions of primary molars. Pediatr Dent. 2007;29(1):8-15.

9. Sainani KL, Popat RA. Understanding study design. PM R. 2011;3(6):573-577.

10. Spilker B. Guide to Clinical Trials. Philadelphia, PA: Lippincott Williams & Wilkins; 1991.

11. Bayne SC. Dental restorations for oral rehabilitation – testing of laboratory properties versus clinical performance for clinical decision-making. J Oral Rehabil. 2007;34(12):921-932.

12. Cvar JF, Ryge G. Criteria for the Clinical Evaluation of Dental Restorative Materials. San Francisco, CA: US Department of Health, Education, and Welfare; 1971.

13. Bayne SC, Schmalz G. Reprinting the classic article on USPHS evaluation methods for measuring the clinical research performance of restorative materials. Clin Oral Investig. 2005;9(4):209-214.

14. Bayne SC. Correlation of clinical performance with ‘in vitro tests’ of restorative dental materials that use polymer-based matrices. Dent Mater. 2012;28(1):52-71.

15. Bayne SC, Heymann HO, Sturdevant JR, et al. Contributing co-variables in clinical trials. Am J Dent. 1991;4(5):247-250.

16. Hickel R, Roulet JF, Bayne SC, et al. Recommendations for conducting controlled clinical studies of dental restorative materials, Part 1: study design. Int Dent J. 2007;57(5):300-302.

17. Hickel R, Roulet JF, Bayne SC, et al. Recommendations for conducting controlled clinical studies of dental restorative materials, Part 2: criteria for evaluation. J Adh Dent. 2007;9 suppl 1:121-147.

18. Brunson WD, Bayne SC, Sturdevant JR, et al. Three-year clinical evaluation of a self-cured posterior composite resin. Dent Mater. 1989;5(2):127-132.

19. Wilder AD Jr, May KN Jr, Bayne SC, et al. Seventeen-year clinical study of ultraviolet-cured posterior composite Class I and II restorations. J Esthet Dent. 1999;11(3):135-142.

20. Swift EJ Jr, Perdigão J, Heymann HO, et al. Eighteen-month clinical evaluation of a filled and unfilled dentin adhesive. J Dent. 2001;29(1):1-6.

21. Swift EJ Jr, Perdigão J, Wilder AD Jr, et al. Clinical evaluation of two one-bottle dentin adhesives at three years. J Am Dent Assoc. 2001;132(8):1117-1123.

22. Crombie IK, Davies HT. What is meta-analysis? In: Evidence-based Medicine? 2nd ed. London, England: Hayward Medical Communications; 2009:1-8. http://www.medicine.ox.ac.uk/bandolier/painres/download/whatis/meta-an.pdf. Accessed November 20, 2013.

23. Taylor DF, Bayne SC, Leinfelder KF, et al. Pooling of long term clinical wear data for posterior composites. Am J Dent. 1994;7(3):167-174.

24. Hemingway P, Brereton N. What is a systematic review? In: Evidence-based Medicine? 2nd ed. London, England: Hayward Medical Communications; 2009:1-8. http://www.medicine.ox.ac.uk/bandolier/painres/download/whatis/syst-review.pdf. Accessed November 20, 2013.

25. Cunha-Cruz J, Stout JR, Heaton LJ, et al. Dentin hypersensitivity and oxalates: a systematic review. J Dent Res. 2011;90(3):304-310.

26. Rugh JD, Sever N, Glass BJ, Matteson SR. Transferring evidence-based information from dental school to practitioners: a pilot “academic detailing” program involving dental students. J Dent Educ. 2011;75(10):1316-1322.

27. Sauvé S, Lee HN, Meade MO, et al. The critically appraised topic: a practical approach to learning critical appraisal. Ann R Coll Physicians Surg Can. 1995;28(7):396-398.

28. Bayne SC, Grayden SK. DMG-IADR Abstract Research Patterns by Type, Focus, and Funding [abstract]. J Dent Res. 2008;87(spec iss A). Abstract 1093.
